Given recent evidence of the irreproducibility of a surprising number of published scientific findings, the White House’s Office of Science and Technology Policy (OSTP) sought ideas for “leveraging its role as a significant funder of scientific research to most effectively address the problem”, and announced funding for projects to “reset the self-corrective process of scientific inquiry”. (First noted in this post.)

This morning I was sent some information, including a rather long description of the project that has received the top government award so far (and it’s in the millions). I haven’t had time to read the proposal*, which I’ll link to shortly. For a clear and quick description, you can read the excerpt below from an interview with the OSTP representative, conducted by Jim Stein, editor of the Newsletter for Innovation in Science Journals (Working Group), who took the lead in writing the author checklist for Nature.

Stein’s queries are in burgundy; OSTP’s replies are in blue. My occasional comments are in black, and I’ll update them once I study the fine print of the proposal itself.

Your office has been inundated with proposals since announcing substantial funding for projects aimed at promoting the self-corrective process of scientific inquiry and ensuring aggregate scientific reproducibility. I understand the top-funded endeavor has been approved by over 40 stakeholders, including editors of leading science journals, science agencies, patient-advocacy groups, online working groups, post-publication reviewers, and researchers themselves. Can you describe this funded initiative and what it will mean for scientists?

OSTP: Not all the details are in place, but the idea is quite simple. We will start with a pilot program to control aggregate retractions of articles due to problematic or irreproducible results across the sciences. In Phase 1, each journal is given the right to anywhere from 8 to 18 retractions every 4 years.

I believe that Nature had around 14 retractions in barely two years, 2013–14. What will a journal do when it comes close to the cap?

OSTP: They can purchase retraction rights from a journal that hasn’t used up its limit. In other words, journals with solid publications and few retractions can sell their retraction rights (or “offsets”) to other journals throughout the period. We think this is a sound way to ensure aggregate replicability and instill researcher responsibility.

So this is something like trading carbon emissions?

OSTP: Some aspects are redolent of those enterprises, and we have leading economists on the Phase 1 pilot, but the outcome is entirely in the control of the journal, authors and reviewers. Emissions are largely necessary consequences of industrial activities; retractions are not.

____________

Oh my, and I had thrown out an idea like this facetiously. Here’s a picture that just came to mind of a retraction offset certificate:

I don’t mean to poke fun at this effort. It’s a dramatic step, and I certainly endorse the last couple of ideas below.

OSTP: As an alternative to purchasing retraction rights from another journal, an editor may seek to withdraw articles as a precautionary measure… [with some algorithm as yet to be worked out]. Given the low rates of reproducibility (scientists at Amgen, for instance, found that “scientific findings were confirmed in only 11% [of] cases”), we think precautionary withdrawal rates of something like 30% to 50% might be the norm, at least at the outset.

Can journal editors withdraw papers accepted for publication even when there are no grounds for retraction, or even when the papers are problem-free? How will authors cope with this uncertainty?

OSTP: Under the new rules, once papers are accepted and approved in final form, authors grant journals withdrawal rights for a probationary period of 4 years, alongside the other rights they currently bestow on publishers. Accepted papers are cross-validated by independent monitoring groups, using holdout data and other means. Once a paper is written and accepted, an independent group uses the holdout data set to check whether the claim holds up, and adds an appendix to the paper reporting the result. Failed replication is not grounds for retraction, but a journal might seek precautionary withdrawal of such a paper. Online sources, such as post-publication peer review, could also provide information leading to a PW [precautionary withdrawal].

Precautionary withdrawal is an entirely new concept for all of us. Might a journal withdraw a paper even without grounds to suspect the integrity of the work?

OSTP: Strictly speaking, yes, but only during the 4-year probationary period. Let me be clear: editors from leading journals are fully behind this; they themselves told the NIH that having a holdout data set is the key. The nice thing is that papers subject to precautionary withdrawal do not carry the stigma often associated with retractions, whether for honest error, flawed information, incomplete methodology, or scientific misconduct.

On the other hand, under this new plan a retraction could, in serious cases, well require researchers and institutions to refund the federal funds involved. Impact factors are also likely to be reduced following retractions, but not following withdrawals [with an algorithm to be developed by members of Retraction Watch]. [See “Time for a Retraction Penalty?” i]

Are there carrots as well as sticks?

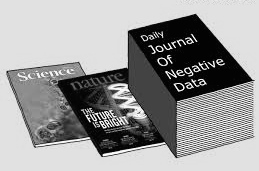

OSTP: Quite a few. For one thing, journals will be rewarded for publishing articles that critically appraise failed methodologies, biases, and dead ends: what didn’t work, and why. ‘How not to go about research of a given type’ will be a newly funded area at NSF, NIH and other agencies and societies. This kind of “meta” research will be a new field in which young scientists are well placed to earn respectable publication credentials. We’re really excited about this.

Yes, journals have often been loath to publish failed results and negative data; hence the file-drawer problem.

OSTP: They will now, because the number of retraction offsets a journal is granted rises dramatically with the percentage of critical meta-research it publishes. There will be other perks to incentivize this critical meta-research as well. We’re not talking merely about negative results, mind you, but about substantive and systematic analyses of deceptive results, demonstrating how they’ve misled subsequent research, led to flawed clinical trials (and even deaths), and created obstacles to cumulative knowledge.

Ah, the meta-research on failed attempts is a great idea! (I will see if I can apply to contribute to this. Seriously.)

OSTP: The truth is, we currently have whole fields that have been created around results that have been retracted!

Nobody knew?

OSTP: Apparently not, and nobody bothered to check before “building” on piles of sand [see i]. Now the truth will out, but in a positive, constructive, truth-seeking manner. In this connection, another idea in the works is to give the public an incentive to improve science and recover wasted federal funds: a percentage of recovered funds would be paid to whoever first provides online evidence of scientific misconduct leading to retraction (by whatever explicit definition).

This is really going to shake up the system.

OSTP: Frankly, we are left with no option. Of course this is just one of several pilot projects to be explored through government funding over several years.

Is there a general name for the new project?

OSTP: The word “paradigm” is overworked, so we have adopted “framework”: a new Framework to Ensure Aggregate Reproducibility.

Given that there are a few more sticks than carrots, this seems an apt name: the Framework to Ensure Aggregate Reproducibility (FEAR). Was the acronym on purpose, do you suppose?

————-

*I’m about to board a plane, and won’t get to update this for several hours.

[i] The footnote in the interview was to a paper by Adam Marcus and Ivan Oransky (of Retraction Watch) in Labtimes (03/2014), “Time for a Retraction Penalty?”: “There’s evidence, in fact, that Cell, Nature and Science would suffer the most from such penalties, since journals with high impact factors tend to have higher rates of retraction, as Arturo Casadevall and Ferric Fang showed in a 2011 paper in Infection and Immunity…Journals might also get points for raising awareness of their retractions, in the hope that authors wouldn’t continue to cite such papers as if they’d never been withdrawn – an alarming phenomenon that John Budd and colleagues have quantified and that seems to echo the 1930s U.S. Works Progress Administration employees, being paid to build something that another crew is paid to tear down. After all, if those citations don’t count toward the impact factor, journals wouldn’t have an incentive to let them slide.”

April 2 addition: Check date!

Many people wrote that they were utterly convinced for most of the day, but my 4/1 posts are always inclined to be almost true, or true in part, and they may well even become true. I’m still rooting for critical meta-research. You’re still free to comment on why FEAR would or would not work.
