I thought the criticisms of social psychologist Jens Förster were already quite damning (despite some attempts to explain them as mere QRPs), but there’s recently been some pushback from two of his co-authors, Liberman and Denzler. Their objections are directed at the application of a distinct method, touted as “Bayesian forensics”, to their joint work with Förster. I discussed it very briefly in a recent “rejected post”. Perhaps the earlier method of criticism was inapplicable to these additional papers, and there’s an interest in seeing those papers retracted as well as the one that was. I don’t claim to know. A distinct “policy” issue is whether there should be uniform standards for retraction calls. At the very least, one would think new methods should be well-vetted before subjecting authors to their indictment (particularly methods that are incapable of yielding exculpatory evidence, like this one). Here’s a portion of their response. I don’t claim to be up on this case, but I’d be very glad to have reader feedback.

Nira Liberman, School of Psychological Sciences, Tel Aviv University, Israel

Markus Denzler, Federal University of Applied Administrative Sciences, Germany

June 7, 2015

Response to a Report Published by the University of Amsterdam

The University of Amsterdam (UvA) has recently announced the completion of a report that summarizes an examination of all the empirical articles by Jens Förster (JF) during the years of his affiliation with UvA, including those co-authored by us. The report is available online. The report relies solely on statistical evaluation, using the method originally employed in the anonymous complaint against JF, as well as a new version of a method for detecting “low scientific veracity” of data, developed by Prof. Klaassen (2015). The report concludes that some of the examined publications show “strong statistical evidence for low scientific veracity”, some show “inconclusive evidence for low scientific veracity”, and some show “no evidence for low veracity”. UvA announced that on the basis of that report, it would send letters to the Journals, asking them to retract articles from the first category, and to consider retraction of articles in the second category.

After examining the report, we have reached the conclusion that it is misleading, biased and is based on erroneous statistical procedures. In view of that we surmise that it does not present reliable evidence for “low scientific veracity”.

We ask you to consider our criticism of the methods used in UvA’s report and the procedures leading to their recommendations in your decision.

Let us emphasize that we never fabricated or manipulated data, nor have we ever witnessed such behavior on the part of Jens Förster or other co-authors.

Here are our major points of criticism. Please note that, due to time considerations, our examination and criticism focus on papers co-authored by us. Below, we provide some background information and then elaborate on these points.

- The new method is falsely portrayed as “standard procedure in Bayesian forensic inference.” In fact, it is set up in such a way that evidence can only strengthen a prior belief in low data veracity. This method is not widely accepted among other experts, and has never been published in a peer-reviewed journal.

Despite that, UvA’s recommendations for all but one of the papers in question are solely based on this method. No confirming (not to mention disconfirming) evidence from independent sources was sought or considered.

- The new method’s criteria for “low veracity” are too inclusive (5-8% chance to wrongly accuse a publication as having “strong evidence of low veracity” and as high as 40% chance to wrongly accuse a publication as showing “inconclusive evidence for low veracity”). Illustrating the potential consequences, a failed replication paper by other authors that we examined was flagged by this method.

- The new method (and in fact also the “old method” used in former cases against JF) rests on a wrong assumption that dependence of errors between experimental conditions necessarily indicates “low veracity”, whereas in real experimental settings many (benign) reasons may contribute to such dependence.

- The report treats between-subjects designs of 3 x 2 as two independent instances of 3-level single-factor experiments. However, the same (benign) procedures may render this assumption questionable, thus inflating the indicators for “low veracity” used in the report.

- The new method (and also the old method) estimates fraud as the extent of deviation from a linear contrast. This contrast cannot be applied to “control” variables (or control conditions) for which experimental effects were neither predicted nor found, as was done in the report. The misguided application of the linear contrast to control variables also produces, in some cases, inflated estimates of “low veracity”.

- The new method appears to be critically sensitive to minute changes in values that are within the boundaries of rounding.

- Finally, we examine every co-authored paper that was classified as showing “strong” or “inconclusive” evidence of low veracity (excluding one paper that is already retracted), and show that it does not feature any reliable evidence for low veracity.

Background

On April 2nd each of us received an email from the head of the Psychology Department at the University of Amsterdam (UvA), Prof. De Groot, on behalf of the University’s Executive Board. She informed us that all the empirical articles by Jens Förster (JF) during the years of his affiliation with UvA, including those co-authored by us, had been examined by three statisticians, who submitted their report. According to this (earlier version of the) report, we were told, some of the examined publications had “strong statistical evidence for fabrication”, some had “questionable veracity,” and some showed “no statistical evidence for fabrication”. Prof. De Groot also wrote that on the basis of that report, letters would be sent to the relevant Journals, asking them to retract articles from the first two categories. It is important to note that this was the first time we were officially informed about the investigation. None of the co-authors had ever been contacted by UvA to assist with the investigation. The University could have taken interest in the data files, or in earlier drafts of the papers, or in information on when, where and by whom the studies were run. Apparently, however, UvA’s Executive Board did not find any of these relevant for judging the potential veracity of the publications and requesting retraction.

Only upon repeated requests, on April 7th, 2015, we received the 109-page report (dated March 31st, 2015) and were given 2.5 weeks to respond. This deadline was determined one-sidedly. Also, UvA did not provide the R-code used to investigate our papers for almost two weeks (until April 22nd), despite the fact that it was listed as an attachment to the initial report. We responded on April 27th, following which the authors of the report corrected it (henceforth Report-R) and wrote a response letter (henceforth, the PKW letter, after authors Peeters, Klaassen, and de Wiel). Both documents are dated May 15, 2015, but were sent to us only on June 2, the same day that UvA also published the news regarding the report and its conclusions on its official site, and the final report was leaked. Thus, we were not allowed any time to read Report-R or the PKW letter before the report and UvA’s conclusions were made public. These and other procedural decisions by the UvA were needlessly detrimental to us.

The present response letter refers to Report-R. Report-R is almost unchanged compared to the original report, except that the language of the report and the labels for the qualitative assessments of the papers are somewhat softened, to refer to “low veracity” rather than “fraud” or “manipulation”. This has been done to reflect the authors’ own acknowledgement that their methods “cannot demarcate fabrication from erroneous or questionable research practices.” UvA’s retraction decisions only slightly changed in response to this acknowledgement. They are still requesting retraction of papers with “strong evidence for low veracity”. They are also asking journals to “consider retraction” for papers with “inconclusive evidence for low veracity,” which seems not to match this lukewarm new label (also see Point 2 below about the likelihood for a paper to receive this label erroneously).

Unlike our initial response letter, this letter is not addressed to UvA, but rather to editors who read Report-R or reports about it. To keep things simple, we will refer to the PKW letter by citing from it only when necessary. In this way, a reader can follow our argument by reading Report-R and the present letter, but is not required to also read the original version of the report, our previous response letter, and the PKW letter.

Because of time pressure, we decided to respond only to findings that concerned co-authored papers, excluding the by-now-retracted paper Förster and Denzler (2012, SPPS). We therefore looked at the general introduction of Report-R and at the sections that concern the following papers:

In the “strong evidence for low veracity” category

Förster and Denzler, 2012, JESP

Förster, Epstude, and Ozelsel, 2009, PSPB

Förster, Liberman, and Shapira, 2009, JEP:G

Liberman and Förster, 2009, JPSP

In the “inconclusive evidence for low veracity” category

Denzler, Förster, and Liberman, 2009, JESP

Förster, Liberman, and Kuschel, 2008, JPSP

Kuschel, Förster, and Denzler, 2010, SPPS

This is not meant to suggest that our criticism does not apply to the other parts of Report-R. We just did not have sufficient time to carefully examine them. We would like to elaborate now on points 1-7 above and explain in detail why we think that UvA’s report is biased, misleading, and flawed.

- The new method by Klaassen (2015) (the V method) is inherently biased

Report-R heavily relies on a new method for detecting low veracity (Klaassen, 2015), whose author, Prof. Klaassen, is also one of the authors of Report-R (and its previous version).

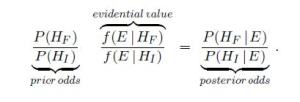

In this method (which we’ll refer to as the V method), a V coefficient is computed and used as an indicator of data veracity. V is called “evidential value” and is treated as the belief-updating coefficient in Bayes formula, as in equation (2) in Klaassen (2015).

For example, according to the V method, when we examine a new study with V = 2, our posterior odds for fabrication should be double the prior odds. If we now add another study with V = 3, our odds for fabrication should triple again. Klaassen, 2015, writes “When a paper contains more than one study based on independent data, then the evidential values of these studies can and may be combined into an overall evidential value by multiplication in order to determine the validity of the whole paper” (p. 10).

The problem is that V is not allowed to be less than unity. This means that there is nothing that can ever reduce confidence in the hypothesis of “low data veracity”. The V method entails, for example, that the more studies there are in a paper, the more we should get convinced that the data has low veracity.
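The multiplicative updating described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic as the letter describes it, not Klaassen’s implementation; the function name `posterior_odds` and the prior odds of 1 are hypothetical choices made for the example.

```python
from functools import reduce
from operator import mul

def posterior_odds(prior_odds, evidential_values):
    """Multiply prior odds by each study's V, as the letter describes the V method."""
    # The letter states that V is never below 1, so smaller inputs are rejected.
    if any(v < 1 for v in evidential_values):
        raise ValueError("V is not allowed to be less than unity")
    return prior_odds * reduce(mul, evidential_values, 1.0)

prior = 1.0  # hypothetical prior odds, for illustration only
after_one_study = posterior_odds(prior, [2])       # odds doubled
after_two_studies = posterior_odds(prior, [2, 3])  # doubled, then tripled
print(after_one_study, after_two_studies)
```

Because every factor is at least 1, the running product can never decrease as studies are added, which is the asymmetry the letter objects to.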

Klaassen (2015) writes “we apply the by now standard approach in Forensic Statistics” (p. 1). We doubt very much, however, that an approach that can only increase confidence in a defendant’s guilt could be a standard approach in court.

We consulted an expert in Bayesian statistics (who preferred not to be named). S/he found the V method problematic, and noted that quite contrary to the V method, typical Bayesian methods would allow both upward and downward changes in one’s confidence in a prior hypothesis.

In their letter, PKW defend the V method by saying that it has been used in the Stapel and Smeesters cases. As far as we know, however, in these cases there was other, independent evidence of fraud (e.g., Stapel reported significant effects with t-test values smaller than 1, and in Smeesters’ data individual scores were distributed too evenly; see Simonsohn, 2013) and the V method was only supporting other evidence. In contrast, in our case, labeling the papers in question as having “low scientific veracity” is almost always based only on V values – the second method for testing “ultra-linearity” in a set of studies (ΔF combined with the Fisher’s method) either could not be applied due to a low number of independent studies in the paper or was applied and did not yield a reason for concern. We do not know what weight the V method received in the Stapel and Smeesters cases (relative to other evidence), and whether all the experts who examined those cases found the method useful. As noted before, a statistician we consulted found the method very problematic.

The authors of Report-R do acknowledge that combining V values becomes problematic as the number of studies increases (e.g., p. 4) and explain in the PKW letter that “the conclusions reached in the report are never based on overall evidential values, but on the (number of) evidential values of individual samples/sub-experiments that are considered substantial”. They nevertheless proceed to compute overall V’s and report them repeatedly in Report-R (e.g., “The overall V has a lower bound of 9.93”, p. 31; “The overall V amounts to 8.77”, on p. 66). Why?

- The criteria for “low veracity” are too inclusive

… applying the V method across the board would result in erroneously retracting 1/12-1/19 of all published papers with experimental designs similar to those examined in Report-R (before taking into account those flagged as exhibiting “inconclusive” evidence).

In their letter, PKW write “these probabilities are in line with (statistical) standards for accepting a chance-result as scientific evidence”. In fact, these p-values are higher than is commonly acceptable in science. One would think that in “forensic” contexts of “fraud detection” the threshold should be, if anything, even higher (meaning, with lower chance for error).

Report-R says “When there is no strong evidence for low scientific veracity (according to the judgment above), but there are multiple constituent (sub)experiments with a substantial evidential value, then the evidence for low scientific veracity of a publication is considered inconclusive (p.2).” As already mentioned, UvA plans to ask journals to consider retraction of such papers. For example, in Denzler, Förster, and Liberman (2009) there are two Vs that are greater than 6 (Table 14.2) out of 17 V values computed for that paper in Report-R. The probability of obtaining two or more values of 6 or more out of 17 computed values by chance is 0.40. Let us reiterate this figure – 40% chance of type-I error.

Do these thresholds provide good enough reasons to ask journals to retract a paper or consider retraction? Apparently, the Executive Board of the University of Amsterdam thinks so. We are sure that many would disagree.

An anecdotal demonstration of the potential consequences of applying such liberal standards comes from our examination of a recent publication by Blanken, de Ven, Zeelenberg, and Meijers (2014, Social Psychology) using the V method. We chose this paper because it had the appropriate design (three between-subjects conditions) and was conducted as part of an Open Science replication initiative. It presents three failures to replicate the moral licensing effect (e.g., Merritt, Effron, & Monin, 2010). The whole research process is fully transparent and materials and data are available online. The three experiments in this paper yield 10 V values, two of which are higher than 6 (9.02 and 6.18; we thank PKW for correcting a slight error in our earlier computation). The probability of obtaining two or more V-values of 6 or more out of 10 by chance is 0.19. By the criteria of Report-R, this paper would be classified as showing “inconclusive evidence of low veracity”. By the standards of UvA’s Executive Board, which did not seek any confirming evidence to statistical findings based on the V method, this would require sending a note to the journal asking it to consider retraction of this failed replication paper. We doubt if many would find this reasonable.
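The 0.40 and 0.19 figures quoted above can be approximately reproduced with a binomial tail calculation. The sketch below assumes that each V value independently has a chance of about 0.08 of reaching 6 when nothing is wrong. That per-value rate is an assumption chosen here because it matches the quoted figures; it is not stated in this excerpt, and the function name is hypothetical.

```python
from math import comb

def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p), via the complement of the lower tail."""
    return 1.0 - sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k))

P_SINGLE = 0.08  # ASSUMED per-value chance of V >= 6 by chance alone

# Two or more "substantial" values among 17 (Denzler, Förster, and Liberman, 2009)
print(round(prob_at_least(2, 17, P_SINGLE), 2))
# Two or more "substantial" values among 10 (Blanken et al., 2014)
print(round(prob_at_least(2, 10, P_SINGLE), 2))
```

Under these assumptions the chance of an “inconclusive” flag grows quickly with the number of V values computed per paper, even though the per-value rate stays fixed.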

It is interesting in this context to note that in a different investigation that applied a variation of the V method (investigation of the Smeesters case) a V = 9 was used as the threshold. Simply adopting that threshold from previous work in the current report would dramatically change the conclusions. Of the 20 V values deemed “substantial” in the papers we consider here, only four have Vs over 9, which would qualify them as “substantial” with this higher threshold. Accordingly, none of the papers would have made it to the “strong evidence” category. In addition, three of the four Vs that are above 9 pertain to control conditions – we elaborate later on why this might be problematic.

- Dependence of measurement errors does not necessarily indicate low veracity

Klaassen (2015) writes: “If authors are fiddling around with data and are fabricating and falsifying data, they tend to underestimate the variation that the data should show due to the randomness within the model. Within the framework of the above ANOVA-regression case, we model this by introducing dependence between the normal random variables ε_ij, which represent the measurement errors” (p. 3). Thus, the argument that underlies the V method is that if fraud tends to create dependence of measurement errors between independent samples, then any evidence of such dependence is indicative of fraud. This is a logically invalid deduction. There are many benign causes that might create dependency between measurement errors in independent conditions. ……

See the entire response: Response to a Report Published by the University of Amsterdam.

Klaassen, C. A. J. (2015). Evidential value in ANOVA-regression results in scientific integrity studies. arXiv:1405.4540v2 [stat.ME].

Discussion of the Klaassen method on pubpeer review https://pubpeer.com/publications/5439C6BFF5744F6F47A2E0E9456703

Some previous posts on Jens Förster case:

- May 10, 2014: Who ya gonna call for statistical Fraudbusting? R.A. Fisher, P-values, and error statistics (again)

- January 18, 2015: Power Analysis and Non-Replicability: If bad statistics is prevalent in your field, does it follow you can’t be guilty of scientific fraud?
